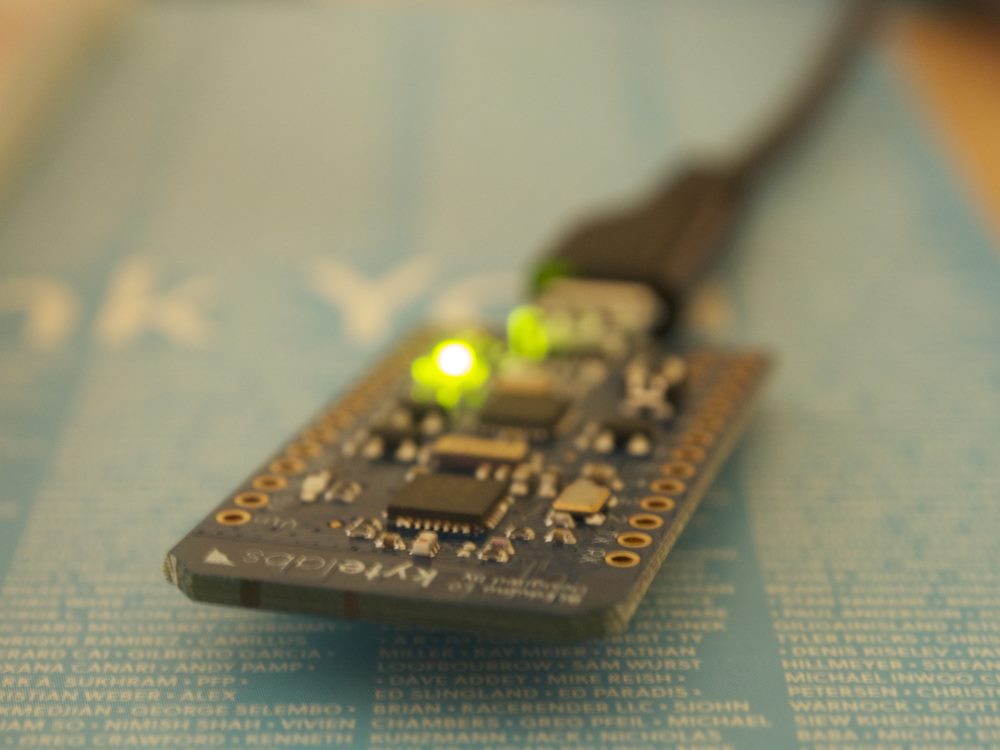

About a year ago I pledged $39 for the Bleduino kickstarter, and although it arrived in my mailbox a few months ago, only today did I have time to start playing around with it. I also got a DHT22 temperature sensor, which I will use with the device. A quick disclaimer: I’m a beginner in Arduino and electronics and would describe my soldering skills as non-existent. Hence you will find no soldering in this post.

Author Archives: Loune

Node.js fun with the same typescript module spread over several js files

I’m building a web application with Node.js and typescript for the first time and have discovered some “fun” quirks with how Node.js modules and typescript modules interact with each other. I use the term interact loosely as they don’t actually talk to each other, and in fact each does its own separate thing. In this post, I’ll offer some tips on how I untangled this.

Node.js modules are based on the CommonJS module architecture, using require and exports. Typescript has language-level support for modules, which are basically glorified namespaces. Typescript also supports AMD, which is yet another module loading specification. Together, these patterns make for a very confusing Javascript ecosystem.

Tying together Typescript’s module system and Node.js requires a bit of glue code. First up, you need to tell typescript about the exports and require identifiers. This is easily done by going to this helpful library of typescript module definitions and downloading node.d.ts. We put node.d.ts in a sub folder called typescript-node-definitions. Next, import the definition into your ts file by putting this line at the top:

///<reference path='typescript-node-definitions/node.d.ts'/>

After that, exports and require should be recognised by the Typescript compiler. You should be able to use nodejs modules by calling require inside your ts files, and you can export with ease by adding this at the end of your ts file:

exports.HomeController = MyWebSite.HomeController;

You can then reference the compiled js file normally in your main file:

var MyWebSite = require('./HomeController.js');

Most likely, you’ll have several files/classes that need to be under the same namespace, so a helper method can be used to corral them under the same object namespace.

[js]
// Merge the exports of every given module into a single namespace object.
function requireall() {
    var cns = {};
    for (var i = 0; i < arguments.length; i++) {
        var ns = require(arguments[i]);
        for (var o in ns) {
            cns[o] = ns[o];
        }
    }
    return cns;
}
[/js]

And replace require with

var MyWebSite = requireall('./HomeController.js', './AboutController.js');
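For illustration, the same merge can also be written with Object.assign. This is only a sketch, not code from the project: the name requireAllMerged is hypothetical, and the built-in path and os modules stand in for the compiled controller files so that the snippet runs on its own.

```javascript
// Hypothetical helper: merge the own enumerable exports of several modules
// into one object. Later modules overwrite earlier ones on name collisions.
function requireAllMerged(...moduleIds) {
  return moduleIds.reduce(
    (merged, id) => Object.assign(merged, require(id)),
    {}
  );
}

// Stand-ins for './HomeController.js' and './AboutController.js'.
const combined = requireAllMerged('path', 'os');

console.log(typeof combined.join);     // an export that came from 'path'
console.log(typeof combined.platform); // an export that came from 'os'
```

Either version silently lets a later file replace an earlier export of the same name, so the class names need to be unique across files.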

Trouble occurs when you try to make one class per file. Your module is spread across multiple ts files, and one of them contains a sub class which references a base class in another. You can add a reference to the base class:

///<reference path="BaseController.ts"/>

This satisfies tsc, but when you run node you’ll get an error:

__.prototype = b.prototype;

^

TypeError: Cannot read property 'prototype' of undefined

That’s because node.js has no concept of typescript references. We need to use require to import the other file. Unfortunately, the namespace we need to import into is recognised by typescript as the module, and any attempt to assign to it results in a compiler error. This is where things get a bit hacky. We need to inject the base class into the namespace without triggering the compiler alarm. Underhandedly, we can use eval to achieve this. Put this under the module blabla { line and modify the __importClassName and __importModuleName variables:

var __importClassName = "HomeController"; var __importModuleName = "MyWebSite"; eval(__importModuleName + "." + __importClassName + " = require(\"./" + __importClassName + ".js\")." + __importClassName + ";");

This will allow node.js to resolve the base class and the whole thing to run!
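To see what the eval line actually runs, the sketch below builds the same string from the same two variables but prints it instead of evaluating it. The variable name generated is only for illustration.

```javascript
// Same variables as in the eval line above.
var __importClassName = "HomeController";
var __importModuleName = "MyWebSite";

// Build the statement without running it, to see what eval would receive.
var generated = __importModuleName + "." + __importClassName +
  " = require(\"./" + __importClassName + ".js\")." + __importClassName + ";";

console.log(generated);
// → MyWebSite.HomeController = require("./HomeController.js").HomeController;
```

Because the assignment only exists inside a string, tsc never sees it and so has nothing to complain about. The price is that the compiler cannot check it either.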

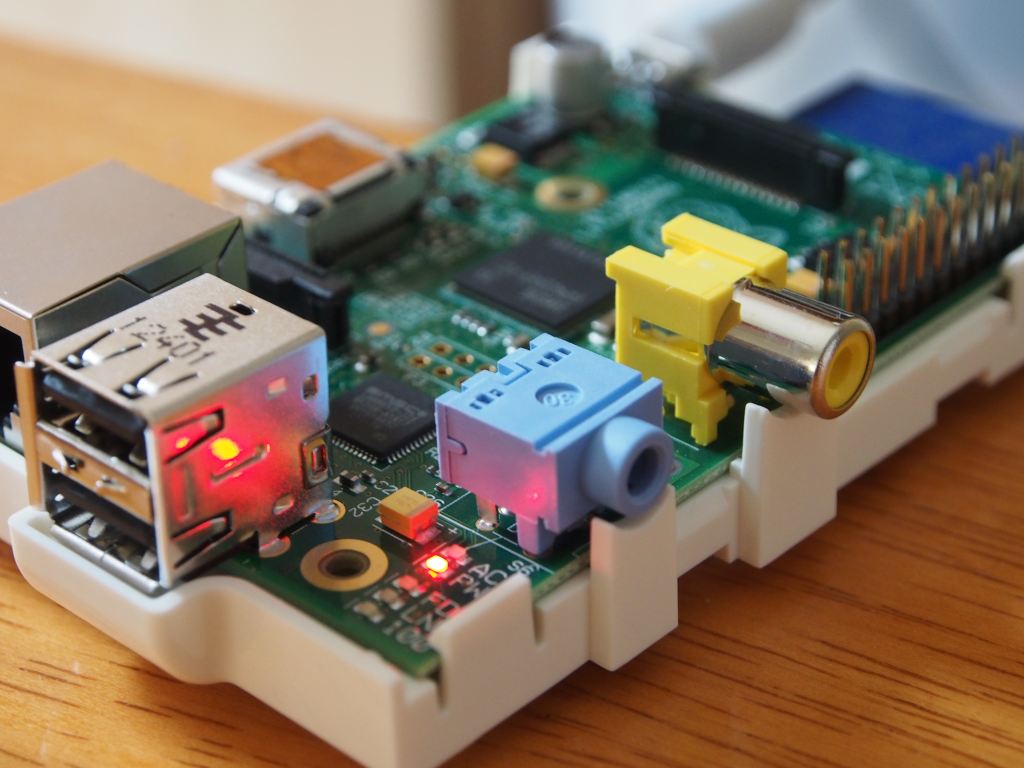

Raspberry Pi as a XBMC MythTV front end

I know I’m probably late to the game, but I just got myself a Raspberry Pi. Currently I have a MythTV front end in the living room run by an old laptop, and my goal is to replace it. Here I share some of my experiences with the Pi.

Debugging a segmentation fault on dnsmasq and fritzbox

I had an issue with my FRITZ!Box, running freetz and dnsmasq, whereby dnsmasq would start and then crash after a few seconds. Since it was working fine before, I figured this must be an environmental issue. The dnsmasq log files were not showing anything helpful. That’s when I remembered strace. strace is a tool which displays all system calls a process makes. It can be used as a debugging tool to gain more insight into what the process was doing at the point at which it crashed. So I ran strace on the process (before it crashed, so I had to be quick).

[code]

# strace -p 7845

[/code]

And I got my answer:

[code]

open("/var/tmp/multid.leases", O_WRONLY|O_CREAT|O_TRUNC|O_LARGEFILE, 0666) = -1 EACCES (Permission denied)

--- SIGSEGV (Segmentation fault) @ 0 (0) ---

Process 7845 detached

[/code]

It was trying to open a file whose permissions had been corrupted, a situation that was probably not handled correctly in the code. Resetting the permissions on that file fixed the issue.

501 5.5.4 Invalid Address when including Sender Name on Windows Server + PHP mail + IIS SMTP or MS Exchange

An interesting issue I came across the other day was PHP giving a 501 5.5.4 Invalid Address error when trying to send email. The server uses IIS SMTP, and the sender was specified using the From: header with a name included, i.e.

From: Me <[email protected]>

Just specifying the email by itself works fine:

From: [email protected]

It turns out that this is a conflict between IIS SMTP and PHP. You need to specify the from email address separately by setting the ini configuration sendmail_from (for the MAIL FROM: command, I presume), i.e.

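[php]
// Send mail with a display name in From:, using sendmail_from for the SMTP envelope sender.
function mail2($from_address, $from_name, $to_address, $subject, $message, $headers) {
    $old_sender = ini_get('sendmail_from');
    ini_set('sendmail_from', $from_address);

    $headers = "From: " . $from_name . " <" . $from_address . ">\r\n" . trim($headers);
    $result = mail($to_address, $subject, $message, $headers);

    // Restore the previous setting so other callers are unaffected.
    ini_set('sendmail_from', $old_sender);
    return $result;
}
[/php]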

Presumably this is not an issue on UNIX as the external sendmail program handles delivery.

Javascript ArrayBuffer – Binary handling in javascript

As javascript and HTML5 venture into applications never contemplated, there was one basic feature sorely missing from its API line up. The feature I’m talking about is of course native support for binary. Until now, if you wanted to manipulate binary in javascript, you had to make do with an array of numbers, storing each byte as an element of the “byte array”. This was horribly inefficient given that each byte stored as a number type probably occupied 4 or 8 bytes, so you would be using 4 to 8 times the space. Another alternative was base64, but that made your binary blob 33% larger and was only good for serialisation and storage.

This changed, however, with the advent of WebGL, the straw that broke the camel’s back. WebGL required efficient processing of byte arrays, which heralded the creation of the ArrayBuffer. Although designed for WebGL, the ArrayBuffer can be used anywhere in your javascript. WebGL is currently available for Firefox 4 and Chrome. The ArrayBuffer allows you to allocate an opaque chunk of memory. To manipulate the buffer, we have to “cast” or map it to a Typed Array. There are various typed arrays such as Int16Array and Float32Array, but the one that interests us is probably Uint8Array, which allows us to view our ArrayBuffer as a byte array.

[js]
var buf = new ArrayBuffer(1024);
var bytes = new Uint8Array(buf);
for (var i = 0; i < bytes.length; i++) {
    bytes[i] = 0xFF;
}
[/js]
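A view does not copy the buffer; it only interprets the same memory. As a small sketch of that idea, the snippet below writes two bytes through a Uint8Array and reads them back as one 16-bit number. DataView is not mentioned in this post; it is used here only because it lets the byte order be stated explicitly.

```javascript
// Allocate 4 bytes and look at them through two different views.
const buf = new ArrayBuffer(4);
const bytes = new Uint8Array(buf);
const view = new DataView(buf);

bytes[0] = 0x34;
bytes[1] = 0x12;

// Both views share the same memory, so the writes above are visible here.
// The second argument `true` asks for little-endian byte order.
const word = view.getUint16(0, true);

console.log(word.toString(16)); // → 1234
console.log(buf.byteLength);    // → 4
```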

The Typed Array may seem like a foreign concept in a dynamically typed language such as javascript, but it’s necessary to provide good performance for binary handling. If you try to assign a Uint8Array element a number greater than 255, it will be truncated to its lowest 8 bits, and if you assign a non-numeric string, it’ll become 0, proving that this is more than just your average JS array.
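A quick sketch of that conversion behaviour follows. Note that a numeric string such as "7" is converted to a number rather than zeroed; only values that cannot be read as a number end up as 0.

```javascript
const bytes = new Uint8Array(4);

bytes[0] = 256;   // only the lowest 8 bits are kept
bytes[1] = 0x1FF; // 511, again reduced to its lowest 8 bits
bytes[2] = "abc"; // cannot be read as a number
bytes[3] = "7";   // numeric string

console.log(Array.from(bytes)); // → [ 0, 255, 0, 7 ]
```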

Already there is talk of using these in the HTML File API and the Web Sockets API. WebSockets currently only support plain text UTF-8 frames, which means you can’t talk binary over the wire. Given that a lot of existing protocols are binary, this is a severe limitation. websockify, a web based proxy that wraps native protocols in websocket frames, needs to base64 each frame. ArrayBuffer support would allow us to do away with the base64 overhead.
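The size of that base64 overhead is easy to measure. The sketch below assumes Node.js, whose Buffer type is not part of the browser APIs discussed here, and an arbitrary payload of 3000 bytes.

```javascript
// 3000 raw bytes, all set to 0xFF (the size is an arbitrary example).
const raw = Buffer.alloc(3000, 0xff);
const encoded = raw.toString('base64');

console.log(raw.length);     // → 3000
console.log(encoded.length); // → 4000

// base64 emits 4 characters for every 3 input bytes.
const overhead = encoded.length / raw.length - 1;
console.log((overhead * 100).toFixed(0) + '% larger'); // → 33% larger
```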

MythWeb and Flash streaming

For a while I’ve heard of this mythical flash streaming that is now supposedly built into MythWeb. However, I have yet to see it anywhere in the website. What gives? So I decided to get to the bottom of this. There’s a wiki article on MythTV web which describes how it’s done, but it is marked as outdated and points to MythWeb’s wiki page, which only mentions that it has been rewritten to enable Flash streaming. So how do I enable it?

After digging in the source and finding various shenanigans with the WebFLV_on variable, the answer revealed itself in the preference pages of MythWeb (Settings > MythWeb > Video Playback). There is a tick box to “Enable Video playback”. However, it says it requires ffmpeg with mp3 support.

I’m using gentoo, so installing ffmpeg was just a matter of emerge -av ffmpeg. After installing, I could finally tick the “Enable Video Playback” box in the preference pages. However, when I then tried playing a video in the flash player, a new stumbling block appeared: it said that pl/stream/bla/bla.flv was not found. Navigating to it manually revealed a 500 Internal Server Error.

Since I’m using Lighttpd, I discovered that it has a deficiency in logging CGI errors. The error.log was useless, and I ended up running Lighttpd in non-daemon mode (/usr/sbin/lighttpd -D -f /etc/lighttpd/lighttpd.conf) and looking at the errors spat out on to the console. It turned out that it requires Math::Round, which I hadn’t installed.

The story is actually a bit more cumbersome. Before any of this, I needed to enable CGI on lighttpd for perl to work, and to get around a streaming path issue I modified $stream_url in /includes/defines.php so that it does not produce a double slash on my root. But I know everyone just wants to see what I wanted to see when I embarked on this journey, a screenshot of it in action:

It’s by no means perfect with video being low quality, lack of seeking and some high CPU usage – but it works!

Match any character including new line in Javascript Regexp

It seems that the dot character in Javascript’s regular expressions matches any character except new line, and no number of modifiers can change that. Sometimes you just want to match everything, and there are a couple of ways to do that.

You can pick an obscure character and apply a negated character range with it, i.e. [^`]+. This is not a true match-any-character though. Or you can try [.\r\n]+, which doesn’t work at all because a dot inside a character class is just a literal dot. (?:\r|\n|.)+ works fine, but as you’ll find out soon, it is notoriously slow: the alternation inside the brackets creates a new 3 way branching point for every character it matches.

The perfect way I’ve found is actually a nicer variation of the first idea:

[^]+

Which means ‘don’t match no characters’, a double negative that can be re-read as ‘match any character’. Hacky, but works perfectly.
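The variants above can be compared side by side on a string containing a new line. This is a minimal sketch: the test string and variable names are made up, and the [\s\S] form is a commonly used alternative that is not discussed in this post. Keep in mind that the empty negated class [^] is a Javascript quirk and may not be accepted by other regex engines.

```javascript
const text = "a x\ny b"; // there is a new line between the a and the b

const dot = /^a.+b$/;                // plain dot stops at the new line
const dotInClass = /^a[.\r\n]+b$/;   // dot inside a class is a literal "."
const branching = /^a(?:\r|\n|.)+b$/; // works, but branches on every character
const emptyNegated = /^a[^]+b$/;     // the negated empty class from above
const spaceClass = /^a[\s\S]+b$/;    // alternative, not from this post

console.log(dot.test(text));          // → false
console.log(dotInClass.test(text));   // → false
console.log(branching.test(text));    // → true
console.log(emptyNegated.test(text)); // → true
console.log(spaceClass.test(text));   // → true
```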

Avahi, setrlimit NPROC and lxc

Over the weekend I installed Avahi (the open source bonjour equivalent) and bumped into a strange error while trying to restart the service. /var/log/messages said chroot.c: fork() failed: Resource temporarily unavailable. Searching the interwebs revealed it is an issue with LXC and setrlimit.

The setrlimit call can set certain limits on processes. One such limit is NPROC, the number of processes that can run under the same UID. Using setrlimit NPROC can enhance security by preventing unexpected forking, like when an attacker is trying to spawn a new process. However, the server I am running on uses LXC, and avahi is installed on the host. In LXC, the containers themselves are isolated from one another, but the host sees all processes. The PIDs of container processes are remapped but their UIDs stay the same. Thus, you will get UID collisions where user 102 in a container can refer to, say, ntp, while 102 on the host can refer to avahi. Because the host sees and accounts for all processes, a setrlimit of, say, 3 processes on avahi (102) will also count existing processes in containers with UID 102 (such as ntp). The limit is then breached and avahi is unable to spawn.

The fix is either to edit avahi-daemon.conf and set rlimit-nproc to a higher value, or to disable rlimits altogether using the --no-rlimits switch.

I guess as LXC and control groups become more common, developers will need to adjust their assumptions about users and processes.

List of Sandy Bridge LGA1155 H67/P67 motherboards that support VT-d

Since publishing my rant a few days ago, I’ve discovered a few more motherboards which CLAIM to support VT-d (Intel Virtualization Technology for Directed I/O). Of course you need a non-K flavour of Sandy Bridge CPU as well (i.e. i5-2400, i5-2500, i7-2600).

So here’s the list so far, which I’ll update as I find more:

- Intel DP67BG (Confirmed, see comment by Michael)

- ASRock P67 (All of them, last time I checked – i.e. Pro, Fatal1ty) (Seems to be bogus according to Brian)

- ASRock H67M (And all variants)

- Foxconn H67S and variants

- Foxconn P67A

- ASUS P8H67-V and P8P67? (See below)

- BIOSTAR TP67XE and variants

- BIOSTAR TH67XE and variants

There’s a good chance that if one of the H67/P67 boards from a manufacturer (e.g. ASRock or Foxconn) has VT-d, all variants from that manufacturer have it too. You can check by searching for VT-d in the product manual.

Update 6/02/2011 – Latest Asus P8P67 manual (E6307) shows the VT-d option under “System Agent Configuration”. I’m not sure whether this is a new addition or something I missed before. A quick check of other Asus boards, i.e. P8P67 EVO or P8H67-M, indicates they don’t have it. The exception is the P8H67-V, which seemed to have it from the beginning. It seems there’s hope for those with Asus boards.

Update 24/03/2011 – None of the boards except for the Intel one seem to support VT-d even if they claim to. There are continuing claims that P67/H67 doesn’t support VT-d.